I think, for once, I got this right the first time. I’ve been casually shopping for laptop stands for, oh, a decade or so, I guess. It became somewhat urgent once laptops started running hot enough that they all have vents and fans on the bottom and still get rather hot in spots.

For me, the stand is primarily for use on the road. I don’t use it at home that much; I’ve got a desktop system, with a comfortable chair and a big monitor, for that. So one of the big issues is the space it takes up in the bag. Also, I’d prefer one that doesn’t draw on the laptop battery to run external fans (those also tend to be rather thick and hence hard to pack).

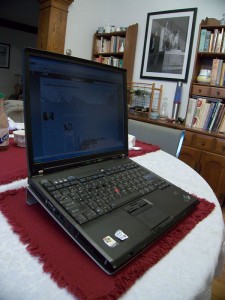

I finally found a company that has created the ideal solution to this problem: Koolsink. They make a simple aluminum sheet, bent into a narrow “J” shape (rather like a “j-card” for a CD jewel case), sized so the computer fits inside it for storage and shaped to give it a little slant for use on a table. They make a number of sizes; do be sure to find the right one for your laptop!

As usual with such things, it’s a rather expensive sheet of bent aluminum. Worse, from my point of view, shipping to the USA costs nearly as much as the product itself (they’re in Canada). And they don’t have retail dealers; they only sell direct.

I’ve now had it for a couple of weeks, though I haven’t taken it on a road trip. It fits nicely around my computer in the bag, and works very well both on a table and in my lap to keep the vents clear and my lap cool. I would have to describe it as doing everything I hoped it would do (I admit my hopes did not include world peace, or a pony).