It probably doesn’t make sense for businesses with deadlines to meet and a shortage of time to hack around with servers the way we do. On the other hand, we’re keeping safe quantities of data that many of those businesses couldn’t contemplate, for a very small fraction of what they pay for semi-enterprise servers. (Real enterprise storage, from Isilon or people in that tier, is safer, much more available, faster, and so hugely more expensive you can hardly imagine.)

A lot of this works because FreeNAS is built on FreeBSD and uses the FreeBSD port of ZFS. I originally started using ZFS when Sun first released it, using the free version of Solaris. After Oracle took over, that became less practical, and after a while running old software I eventually converted to FreeNAS. We built the rest of the Beyond Conventions servers on FreeNAS from the beginning.

ZFS and FreeBSD let us get away with skating closer to the edge. ZFS was famous from the beginning for finding disk problems in old toy systems (where a lot of people first installed it, to try it out without committing to it) and reporting them as clear-cut hardware errors rather than very rare mysterious failures. That’s a good thing: it shows ZFS is zealously guarding your data (it has its own data block checksums, rather than depending entirely on the hardware the way conventional RAID systems do). And it lets us scrimp on disks, especially in the backup arrays. We’re actually using “white label” drives mostly in the backup array, and we need to have multiple redundancy anyway.
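To make the checksum idea concrete, here is a toy sketch in Python. It is not how ZFS is implemented: the function names, the use of SHA-256, and the list of in-memory "copies" are all assumptions chosen for illustration, and the real on-disk format and checksum options differ. It only shows the principle that a checksum stored apart from the data lets a reader spot a silently corrupted copy and fall back to a good one.

```python
import hashlib
from typing import Sequence


def checksum(block: bytes) -> str:
    """Digest kept apart from the block it describes (SHA-256 is an assumption)."""
    return hashlib.sha256(block).hexdigest()


def read_verified(copies: Sequence[bytes], expected: str) -> bytes:
    """Return the first redundant copy whose digest matches `expected`."""
    for data in copies:
        if checksum(data) == expected:
            return data
    # No copy matched: report a hard error instead of returning bad data.
    raise IOError("every copy failed checksum verification")


good = b"photo data"
stored_sum = checksum(good)        # recorded when the block was written
copies = [b"photo dbta", good]     # first copy silently corrupted on disk
recovered = read_verified(copies, stored_sum)
print(recovered == good)
```

A conventional RAID controller that trusts whatever the drive returns would have handed back the first, damaged copy without complaint; the checksum is what turns a mysterious failure into a clear error on one specific disk.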

I would rate FreeNAS as a clear step above Synology and Netgear and Drobo and those players in the small-server market, both in reliability (unless you skimp too hard on the hardware) and in features.

Rebma 3, the disk server over at Corwin’s, in its current incarnation is an 8+2x4TB array, meaning double redundancy and 32TB usable. Fsfs 4, my server, has a 3x6TB mirror, so just 6TB usable, but that’s enough for photos and books and such, just not huge piles of video (which live at Corwin’s). Zzbackup is currently being used to build an 8+2x6TB backup array. Once the data has been replicated to it (locally! it’s a bit big to replicate over the Internet), the array will come over here, the drives will be transferred into Fsfs (which has capacity for 13 drives), and it will be kept up to date via ZFS replication over the Internet. My production array in Fsfs is already replicating onto Rebma over the Internet, so when the last step is complete, we’ll have this huge pile of disk with redundant local storage plus continuous off-site replication. The production servers, though not zzbackup, even have ECC RAM, one further little bit of protection for the data.
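For anyone checking the arithmetic, here is a small Python sketch of how those capacities fall out. It assumes "8+2" means eight data disks plus two parity disks, which matches the 32TB figure above. The function names are made up for this example, and the numbers ignore filesystem overhead and the TB versus TiB difference.

```python
from typing import Tuple


def parity_array_tb(data_disks: int, parity_disks: int, disk_tb: int) -> Tuple[int, int]:
    """Return (usable, raw) TB for an N+P array: only data disks hold user data."""
    usable = data_disks * disk_tb
    raw = (data_disks + parity_disks) * disk_tb
    return usable, raw


def mirror_tb(copies: int, disk_tb: int) -> Tuple[int, int]:
    """Return (usable, raw) TB for an n-way mirror: one disk's worth is usable."""
    return disk_tb, copies * disk_tb


rebma = parity_array_tb(8, 2, 4)    # Rebma 3: 8+2 x 4TB
fsfs = mirror_tb(3, 6)              # Fsfs 4: 3 x 6TB mirror
backup = parity_array_tb(8, 2, 6)   # backup array: 8+2 x 6TB

for name, (usable, raw) in [("Rebma 3", rebma), ("Fsfs 4", fsfs), ("backup", backup)]:
    print(f"{name}: {usable}TB usable of {raw}TB raw")
```

By the same reading, the 8+2x6TB backup array works out to 48TB usable. The 10 backup drives plus the 3 mirror drives also account for the 13 slots in Fsfs.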

The new Rebma cost roughly $500 excluding disks, not the $1450 a roughly comparable Synology server would cost. (We didn’t spend it all at once: Rebma 3 has the same motherboard, processor, and memory as Rebma 2, but a new case with more drive slots and much better cooling.) And I do think FreeNAS with ZFS has many advantages over the Synology software. In either case the disks are the expensive part at this level.

(I can’t resist reading “Synology” as a very clever brand name for “Chinese server”; I wonder if that has anything to do with it really?)

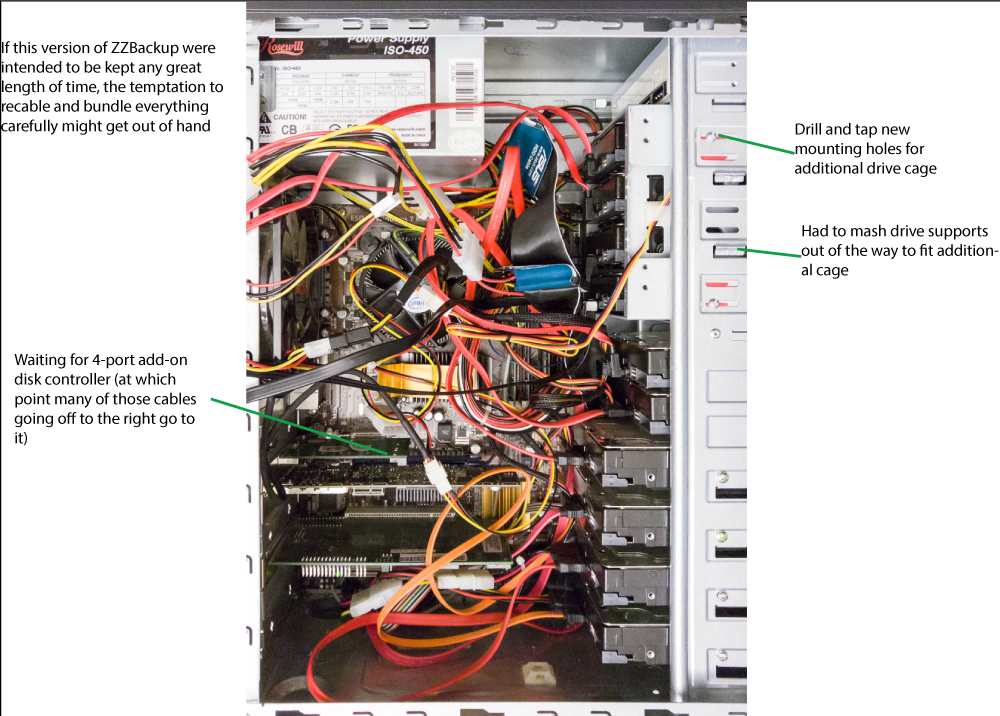

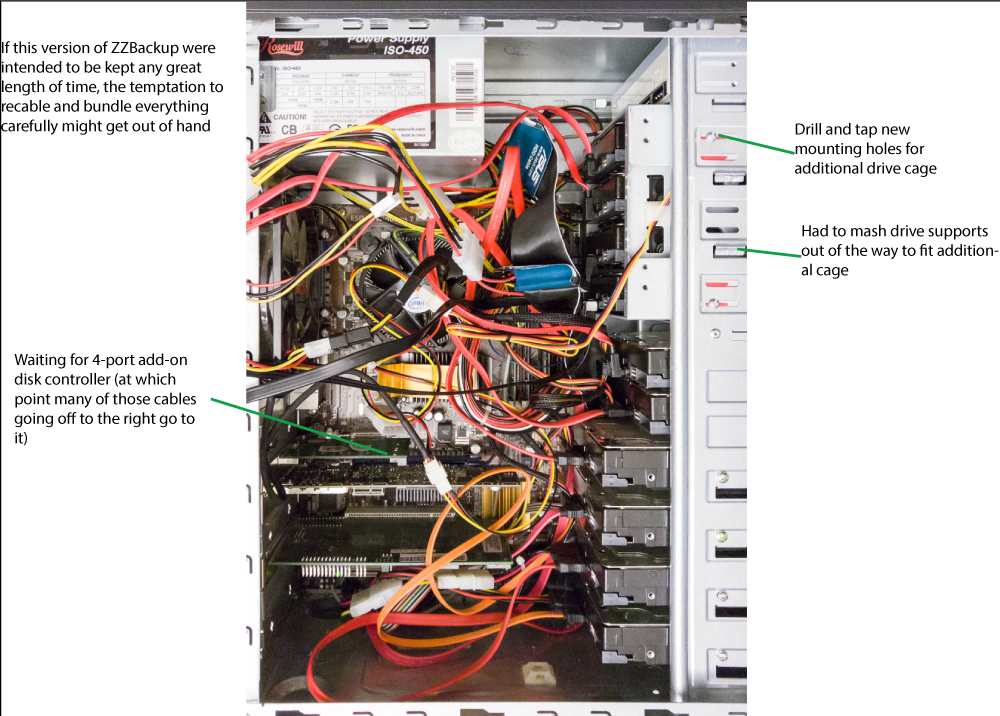

Here’s some of the hacking I’ve done while assembling the current version of zzbackup: